Large Language Models (LLMs) are reshaping how we interact with technology and consume information online.

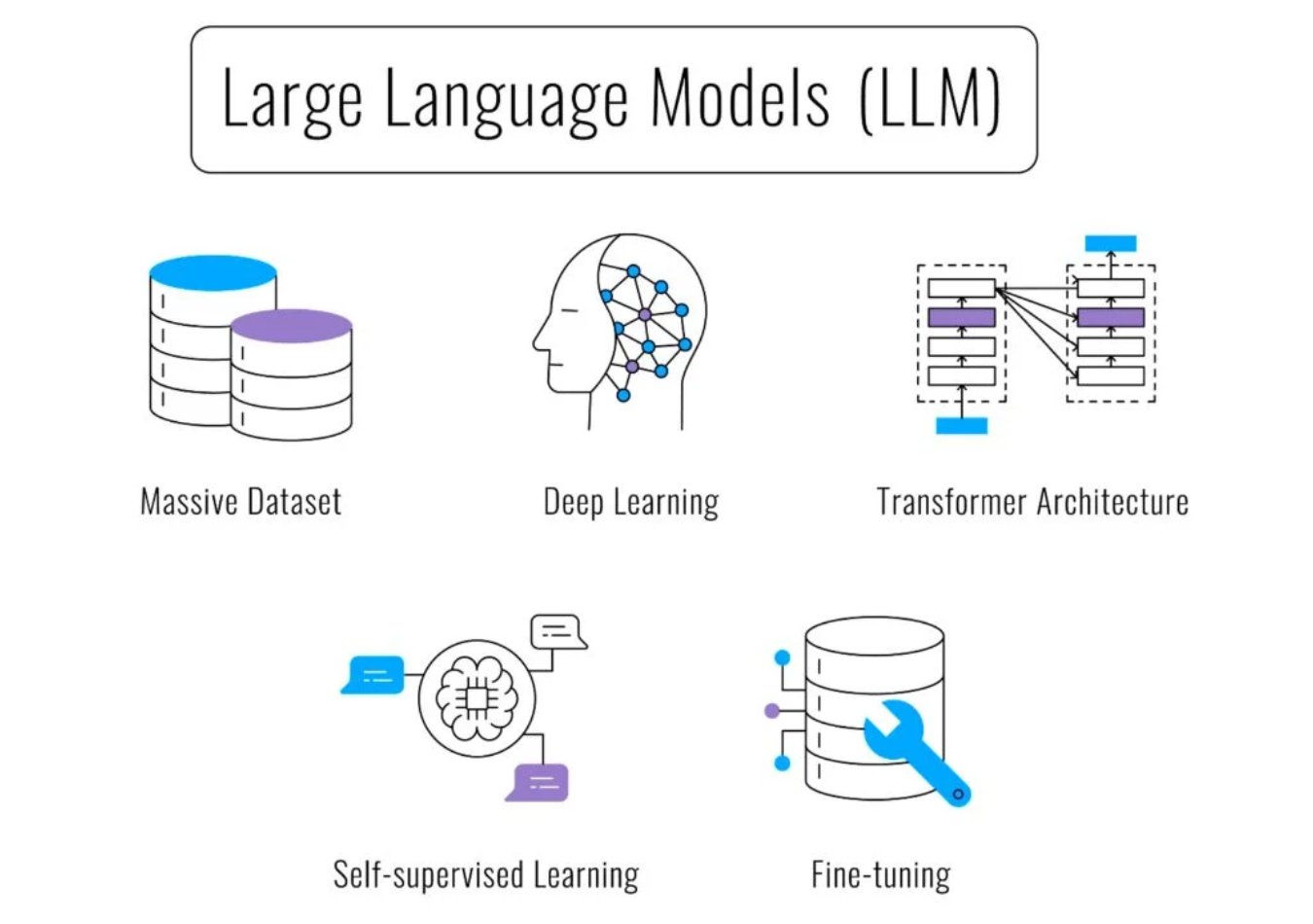

Driven by deep learning algorithms, these AI models have taken the world by storm for their remarkable ability to generate human-like text and perform a range of language-related tasks.

They’re the brains being chatbots including OpenAI’s ChatGPT. They’ve also entered Google search with Google’s AI Overviews, and are being integrated into various applications for customer service, content creation, programming assistance, and more.

The impact of LLMs extends beyond just chat interfaces; they are revolutionizing industries by streamlining workflows, enhancing productivity, and enabling new forms of creativity.

But what are LLMs, and how do they work?

This article tells you everything you need to know about large language models, including what they are, how they work, and examples of LLMs in the real world.

What is a Large Language Model (LLM)? Definition

A Large Language Model is a foundational model designed to understand, interpret and generate text using human language. It does this by processing datasets and finding patterns, grammatical structures and even cultural references in the data to generate text using conversational language.

The term "large" refers to the vast amount of data and the complex architecture used to train these models. LLMs are trained on huge datasets containing text from books, articles, websites, and other written material, allowing them to learn the nuances of language, context, grammar, and provide factual information (most of the time).

LLMs can generate coherent and contextually relevant text based on input prompts. They can perform a variety of language-related tasks, such as answering questions, summarizing text, translating languages, and even engaging in conversation. This versatility makes them valuable in numerous applications, including customer support, content creation, educational tools, and more.

However, LLMs also come with challenges, such as the potential for biases in their outputs, misinformation propagation, and ethical considerations regarding their use. The quality of a language model largely depends heavily on the quality of the data it was trained on. The bigger and more diverse the data used during training, the faster and more accurate the model will be.

How do LLMs work?

When generating responses, the LLM uses probabilistic methods to predict the next word or phrase, based on what it has learned during training. The model’s output is influenced by its training data and any biases inherent within it, which is why LLMs sometimes produce unexpected or biased responses.

Large Language Models are typically made up of neural network architectures called transformer architectures. First coined in Google’s paper "Attention Is All You Need", transformer architectures rely on self-attention mechanisms that allow it to capture relationships between words regardless of their positions in the input sequence.

Since the transformer architectures don't consider the order of words in a sequence, positional encodings are needed to provide information about the position of each token in the sequence, enabling the model to understand the sequential structure of the text.

How are LLMs trained?

During training, the model learns patterns in language by predicting the next word in a sentence or filling in missing words, based on the surrounding context. It uses a mechanism called "attention," which allows them to focus on different parts of the input text when generating output. This means that instead of just looking at individual words in isolation, the model considers the relationships between all words in a sentence.

The attention mechanism is crucial because it helps the model understand the importance of certain words relative to others, even if they are far apart in the sentence. This ability to track long-range dependencies in language is one of the reasons transformer-based models like LLMs are so powerful.

The training process also involves adjusting the weights of millions or even billions of parameters (the neural connections in the model) using a technique called backpropagation. This is done over multiple iterations as the model processes text examples and learns from its mistakes.

Once trained, the LLM can be fine-tuned for specific tasks, such as summarization or question answering, by providing it with additional examples related to that task. However, even after training, LLMs do not "understand" language in the way humans do – they rely on patterns and statistical correlations rather than true comprehension.

What are multimodal LLMs?

Multimodal Large Language Models (LLMs) are advanced versions of standard LLMs that can process and generate content across multiple types of data, such as text, images, audio, and even video. While traditional LLMs are designed to work exclusively with text-based data, multimodal LLMs are capable of understanding and synthesizing information from different modes or mediums.

This ability makes them more versatile, as they can handle more complex tasks that require the integration of various data types, such as describing an image, generating captions, or answering questions about visual content.

For example, a multimodal model can process an image alongside text and provide a detailed response, like identifying objects in the image or understanding how the text relates to visual content. This opens up applications in areas such as computer vision, language understanding, and cross-modal reasoning.

Use Cases for LLMs

1. Customer Support and Virtual Assistants

LLMs are widely used in chatbots and virtual assistants to handle customer inquiries, provide product recommendations, or troubleshoot issues. By analyzing customer input, LLMs can generate relevant responses in real time, reducing the need for human intervention. For example, virtual assistants like Siri, Alexa, or Google Assistant use LLMs to process natural language queries and provide useful information or execute tasks such as setting reminders or controlling smart home devices.

2. Content Creation and Writing Assistance

LLMs can generate articles, blog posts, and other forms of written content. They are used by content creators to assist with brainstorming ideas, writing drafts, or even editing text for grammar and style improvements. Tools like GPT-powered writing assistants can help marketers generate product descriptions, social media posts, and ad copy more efficiently. Additionally, LLMs can assist writers in generating creative content like poetry, stories, or screenplays.

3. Language Translation

LLMs have revolutionized language translation by providing accurate and context-aware translations across multiple languages. Services like Google Translate and DeepL leverage LLMs to improve the quality and fluency of translations by understanding not just individual words but the meaning behind sentences. These models are capable of translating idiomatic expressions and culturally specific phrases with greater accuracy than earlier rule-based systems.

4. Healthcare Applications

In healthcare, LLMs are being used to analyze clinical notes, research papers, and patient records. They can assist medical professionals by summarizing patient histories, flagging potential issues, or even generating medical reports. Additionally, LLMs help in drug discovery by reading and synthesizing vast amounts of biomedical literature, aiding researchers in identifying potential treatments or understanding complex medical concepts.

5. Legal Document Analysis

LLMs are increasingly used in the legal sector for tasks like document review, contract analysis, and legal research. They can quickly scan large volumes of legal documents, extract relevant clauses, summarize legal precedents, and identify inconsistencies, saving time and reducing the workload for lawyers. Some platforms also use LLMs to draft legal documents like contracts and briefs.

Examples of LLMs

1. GPT Series (Generative Pre-trained Transformer)

- Developed by: OpenAI

- Examples: GPT-3, GPT-4, GPT-4o

- GPT models are among the most widely recognized LLMs. They are transformer-based models designed to generate human-like text based on input prompts. GPT-3, for example, has 175 billion parameters and is used in numerous applications, such as chatbots, content creation, and virtual assistants. GPT-4o further improved on its predecessor, offering enhanced reasoning capabilities and supporting multimodal input (both text and images).

2. Gemini

- Developed by: Google DeepMind

- Applications: Multimodal tasks, general-purpose text generation, reasoning, and understanding.

- Overview: Gemini is Google's latest multimodal AI model. It was released earlier this year by DeepMind to merge the capabilities of large language models with powerful reasoning and advanced multimodal functionalities. This model integrates the strengths of LLMs for natural language processing and generation with the ability to handle various data types, such as images and text. Gemini aims to surpass previous models in terms of complex reasoning, problem-solving, and performing a broad range of AI tasks with both text and visual input, positioning it as a competitor to other multimodal systems like GPT-4 and PaLM.

3. Claude

- Developed by: Anthropic

- Applications: Conversational agents, ethical AI research, and general-purpose text generation.

- Claude is another LLM designed for natural language understanding and generation. Developed by Anthropic, Claude emphasizes ethical AI principles, aiming to generate safe and aligned outputs while minimizing the risks of harmful content generation.

4. LlaMa (Large Language Model Meta AI)

- Developed by: Meta

- Applications: Research in NLP, general text-based tasks.

- LLAMA is a family of language models released by Meta for research purposes. LLAMA models are designed to be efficient and accessible to researchers, with smaller versions (e.g., LLAMA-13B) offering competitive performance compared to much larger models like GPT-3. The most recent version is LLaMa 3, which is around as powerful as GPT-4.

5. Mistral

- Developed by: Mistral (a European AI company)

- Applications: General-purpose language understanding and generation.

- Mistral, a newer LLM developed by a European AI startup, is notable for its high performance despite being smaller in size compared to some other models. Mistral emphasizes efficient processing without sacrificing the quality of text generation.

6. Grok

- Developed by: xAI (Elon Musk's company)

- Applications: Currently integrated with X for language understanding and generation tasks.

- Grok is a newly developed LLM aimed at enhancing social media interactions and improving engagement through conversational AI. It was recently launched as part of a collaboration between xAI and X, emphasizing practical applications in social media.