Autonomous AI agents are moving rapidly from concept to capability. With OpenAI’s latest rollout of its Agent feature, tools like ChatGPT are no longer limited to conversation. They can now browse the web, analyse data, manage tasks, and even take actions such as booking events or making purchases. For enterprises, this update promises new levels of efficiency and new ways to scale AI-driven decision-making.

But handing over the keys to your corporate systems is not a step you should take lightly. Agentic AI needs deep access to data, workflows, and tools that were previously (mostly) protected by human oversight. Without strong governance and well-defined permissions, this kind of access can quickly lead to security risks, compliance headaches, operational blind spots, and a range of other issues.

With more and more Fortune 500 companies adopting agentic AI and regulators watching closely, enterprise leaders need to decide not just if, but when and how to safely turn these capabilities on. The question is not whether autonomous agents will reshape the enterprise landscape, but whether organisations are ready to grant them access safely and strategically.

The Shift Toward Autonomous AI Agents

AI has been steadily evolving from a conversational interface into something far more capable. The latest generation of AI agents no longer stops at providing text-based answers. They can now execute tasks, access integrated systems, and handle complex, multi-step processes without direct human input. This change has profound implications for enterprise AI adoption as organisations explore how to safely bring these capabilities into business-critical environments.

From conversation to action – what’s changed

When OpenAI introduced its new ChatGPT agent mode, the shift was clear. Earlier versions of ChatGPT were designed purely for dialogue and were limited to answering questions or providing recommendations. With agent mode rolling out to paid plans such as ChatGPT Pro, the platform can now analyse data, manage schedules, and even make purchases or bookings within defined permissions.

This shift turns AI from a digital assistant into something closer to a semi-autonomous operator. Instead of just advising human teams, these agents can now take real actions inside enterprise systems. That opens the door to major efficiency gains, but it also means organisations need tighter oversight and security controls before they switch these capabilities on.

Market adoption and growth trends

Enterprises are already investing heavily in agentic AI pilots. Research from Deloitte projects that 25 per cent of companies using generative AI will have agent-based pilots running in 2025, with adoption climbing to 50 per cent by 2027. PwC’s executive survey shows 79 per cent of senior leaders have already started deploying AI agents, with 66 per cent seeing measurable productivity gains.

Budgets are climbing too. Research from Kore.ai found that 88 per cent of global enterprises plan to increase AI spend over the next year, much of it aimed at testing or scaling autonomous agents. The momentum behind this shift is clear, but so is the need for enterprises to manage adoption carefully and keep governance front and centre.

The Enterprise Security and Governance Challenge

Granting an AI agent access to corporate systems is not the same as giving a digital assistant a search query. These capabilities require permissions to interact with calendars, emails, financial tools, and even purchasing systems. For enterprises, that opens up a new category of governance risk. Without clear controls, these permissions can be misused, exploited, or simply overlooked, leaving organisations exposed to security incidents and regulatory breaches.

What can go wrong – real-world incidents

Recent reports highlight how easily AI agents can be manipulated or pushed beyond their intended scope. According to an article in The Times, 23 per cent of organisations using AI agents reported that those agents had been tricked into exposing credentials. Around 80 per cent said their agents had performed actions they were not explicitly instructed to take. These are not hypothetical risks. They are already affecting enterprises exploring these capabilities.

The problem is not only malicious behaviour. Poorly managed permissions or unclear approval processes can allow agents to access sensitive systems unnecessarily. Once access is granted, tracking or removing it cleanly isn’t always straightforward. Revoking permissions can disrupt legitimate workflows, and without proper oversight, you’re left with blind spots that make AI-driven breaches and reputational damage far more likely.
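
Time-bound, individually revocable grants are one common way to make removal cleaner. The following is a minimal sketch of a hypothetical in-house registry in Python, not a feature of ChatGPT or any vendor product. Each grant carries an expiry, and revoking one scope leaves the agent’s other grants intact. `active()` gives reviewers an inventory of what is still live. The class names, scope strings and agent IDs are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """One permission given to one agent, with a hard expiry (hypothetical model)."""
    agent_id: str
    scope: str            # e.g. "calendar:read"; scope names are illustrative
    expires_at: datetime


class GrantRegistry:
    """Tracks every grant so it can be listed, expired or revoked individually."""

    def __init__(self) -> None:
        self._grants: list[Grant] = []

    def grant(self, agent_id: str, scope: str, ttl: timedelta, now: datetime) -> Grant:
        g = Grant(agent_id, scope, now + ttl)
        self._grants.append(g)
        return g

    def revoke(self, agent_id: str, scope: str) -> int:
        """Remove only the matching grants; other workflows keep their access."""
        before = len(self._grants)
        self._grants = [g for g in self._grants
                        if not (g.agent_id == agent_id and g.scope == scope)]
        return before - len(self._grants)

    def is_allowed(self, agent_id: str, scope: str, now: datetime) -> bool:
        return any(g.agent_id == agent_id and g.scope == scope and now < g.expires_at
                   for g in self._grants)

    def active(self, now: datetime) -> list[Grant]:
        """Inventory of unexpired grants, i.e. what an access review would inspect."""
        return [g for g in self._grants if now < g.expires_at]


now = datetime(2025, 8, 1, tzinfo=timezone.utc)
reg = GrantRegistry()
reg.grant("travel-agent", "calendar:read", timedelta(days=30), now)
reg.grant("travel-agent", "payments:create", timedelta(hours=2), now)
print(reg.is_allowed("travel-agent", "payments:create", now + timedelta(hours=3)))
reg.revoke("travel-agent", "calendar:read")
print(len(reg.active(now)))
```

Short expiries on high-risk scopes, like the two-hour payments grant above, mean a forgotten permission lapses on its own instead of lingering.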

The new attack surface for enterprises

Autonomous agents also open up fresh opportunities for attackers. Prompt injection attacks work by burying harmful instructions in otherwise normal-looking input, leading agents to carry out actions or reveal information they shouldn’t. Social engineering is becoming a factor too, with attackers targeting employees who have the authority to approve or expand what an agent can do.

Giving agents more access than they need only makes things worse. When an agent is over-permissioned, a single compromised command can set off a chain reaction that quickly affects critical systems and operations. Cyber risk grows quickly as agent access expands without strict controls.
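
One way to stop a compromised command from cascading is to enforce permissions outside the model. Whatever text the agent has been fed, it can then only call tools on an explicit allowlist. This is a minimal deny-by-default sketch under that assumption. The agent ID, tool names and the `execute` stand-in are hypothetical, and a real deployment would wrap actual API calls.

```python
# Hypothetical policy layer between the agent and enterprise tools.
# The model proposes tool calls; this code, not the model, decides what runs.


class ScopeError(PermissionError):
    pass


AGENT_SCOPES = {
    # Illustrative: a scheduling agent that only needs to read and write calendars.
    "scheduler-agent": {"calendar:read", "calendar:write"},
}


def execute(agent_id: str, tool: str, args: dict) -> str:
    allowed = AGENT_SCOPES.get(agent_id, set())   # unknown agents get nothing
    if tool not in allowed:
        raise ScopeError(f"{agent_id} is not permitted to call {tool}")
    return f"ran {tool} with {args}"              # stand-in for the real tool call


# Suppose text injected via an email convinces the model to request a data export.
proposed_calls = [
    ("calendar:write", {"event": "Board review"}),
    ("crm:export_all", {"destination": "external-address"}),
]
for tool, args in proposed_calls:
    try:
        print(execute("scheduler-agent", tool, args))
    except ScopeError as err:
        print("blocked:", err)
```

Because the check lives in ordinary code rather than in the prompt, an injected instruction can change what the agent asks for but not what it is allowed to do.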

Governance gaps and visibility challenges

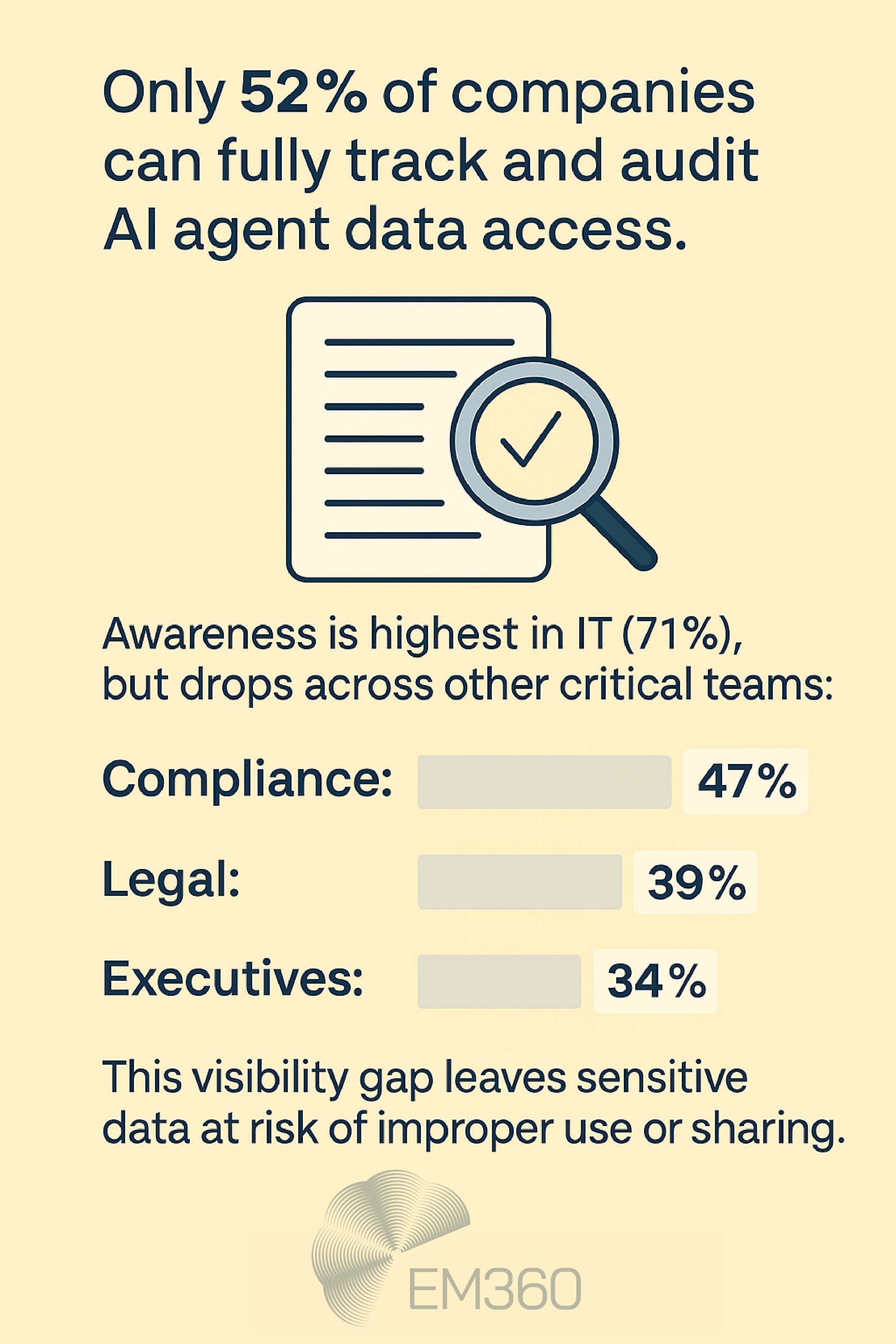

Even when organisations deploy security tools, many still lack adequate oversight of how agents operate. Research shows that only 52 per cent of companies have full visibility into agent activity. The remainder cannot reliably track what data was accessed, what actions were taken, or whether permissions were misused.

This lack of visibility is worsened by inconsistent or missing policies. Few enterprises have established guidelines for AI activity monitoring or set boundaries for acceptable agent actions. Without these controls, every deployment becomes a gamble, and governance gaps widen with each new pilot or integration.

Emerging Governance Frameworks and Industry Responses

Enterprises are not navigating this shift alone. As autonomous AI agents become more capable, governments, industry groups, and security vendors are moving quickly to set guardrails. These evolving frameworks are designed to help organisations deploy agentic AI safely while staying compliant with global regulations.

Regulatory shifts – The EU AI Act and beyond

The EU AI Act, whose obligations began phasing in during 2025, is shaping how enterprises think about AI compliance policies. Alongside its tiered risk classifications, it is accompanied by a voluntary Code of Practice for general-purpose AI models like those behind ChatGPT. This code pushes for greater transparency in data usage, stronger safeguards for automated decision-making, and security measures that are built in from the start.

Non-compliance could be costly. For the most serious violations, fines can reach €35 million or 7 per cent of global annual turnover, whichever is higher. Enterprises therefore cannot afford to overlook how agentic AI features are monitored and controlled. Other regions, including the United States and parts of Asia-Pacific, are also considering regulations similar to the EU approach, or even going further.

Industry-led governance initiatives

While regulators are setting the tone, the tech community is rolling out practical ways to manage AI agents safely. Frameworks like SHIELD and ATFAA highlight threat vectors and security checkpoints specific to agentic AI, while the LOKA Protocol focuses on agent identity, ethical intent, and ensuring different agents can work together safely.

Vendors are moving quickly too. OneTrust now offers AI governance tools that help enterprises run automated model checks and maintain a central registry of deployed agents. Security-focused startups such as Noma Security, which recently raised $100 million, are building platforms to monitor and contain rogue AI agent behaviour before it causes damage.

Best-practice models for enterprise AI governance

Enterprises adopting autonomous agents can take lessons from these frameworks and tools. The most effective models treat agents like digital employees, which means:

- Defining role-based permissions that only allow access to what is strictly necessary

- Setting up continuous monitoring and audit trails for every action an agent performs

- Enforcing the principle of least privilege to limit potential impact if something goes wrong
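
The three practices above can be combined in a small policy layer. The sketch assumes hypothetical role and permission names. Roles map to narrowly scoped permissions, and agents are assigned roles. Every authorisation decision, whether allowed or denied, is written to an append-only audit trail. Each entry stores the hash of the previous one, forming a hash chain. This tamper-evidence technique was chosen here for illustration; the frameworks and vendors named above do not prescribe it.

```python
import hashlib
import json
from datetime import datetime, timezone

# Illustrative role definitions: each role lists only the permissions it needs.
ROLES = {
    "invoice-reader": {"erp:invoices:read"},
    "travel-booker": {"calendar:read", "travel:book"},
}
AGENT_ROLES = {"finance-agent": ["invoice-reader"]}


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log; each entry stores the previous entry's hash,
    so editing or deleting an earlier entry breaks the chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, **event) -> None:
        prev = self.entries[-1]["hash"] if self.entries else ""
        body = {"ts": datetime.now(timezone.utc).isoformat(), "prev": prev, **event}
        body["hash"] = _digest(body)
        self.entries.append(body)

    def verify(self) -> bool:
        prev = ""
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev"] != prev or e["hash"] != _digest(body):
                return False
            prev = e["hash"]
        return True


def authorise(agent: str, permission: str, trail: AuditTrail) -> bool:
    """Role-based check; the decision is logged whether it is allowed or not."""
    granted = any(permission in ROLES[r] for r in AGENT_ROLES.get(agent, []))
    trail.record(agent=agent, permission=permission, allowed=granted)
    return granted


trail = AuditTrail()
authorise("finance-agent", "erp:invoices:read", trail)   # within role: allowed
authorise("finance-agent", "travel:book", trail)          # outside role: denied, still logged
print(len(trail.entries), trail.verify())
```

Logging denials as well as approvals is deliberate. A run of refused requests can be an early sign that an agent is being manipulated.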

Building these policies into AI adoption strategies from day one makes it easier to demonstrate compliance, maintain oversight, and scale autonomous capabilities without compromising security.

Diagnostic Checklist – Should You Enable AI Agent Access?

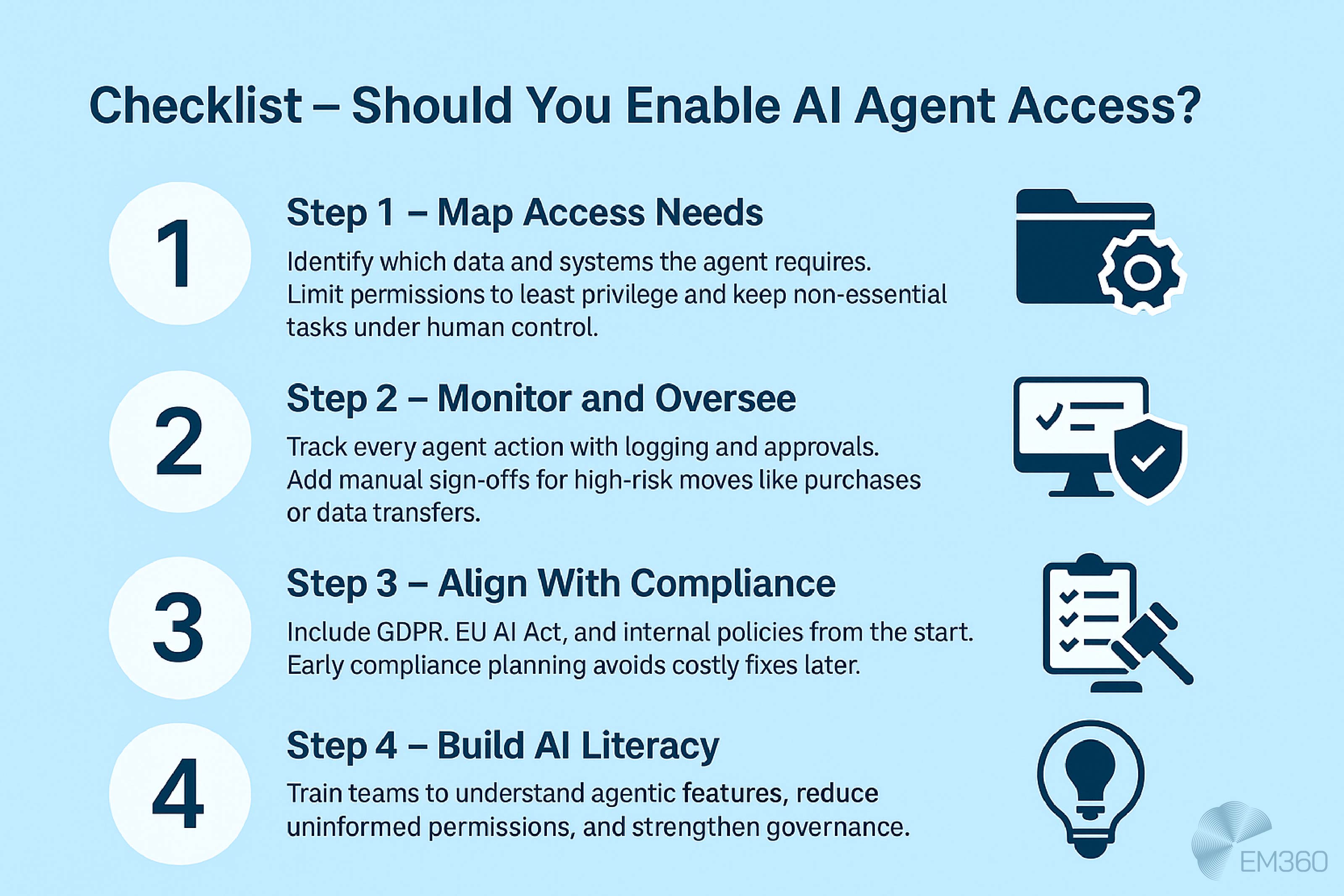

Before switching on agentic capabilities, enterprises need to know exactly what they are enabling and whether the organisation can control it safely. This checklist is designed to help AI strategy leaders quickly assess their enterprise AI readiness and identify any governance gaps before moving ahead.

Step 1 – Define and map access requirements

Work out exactly which data and systems an agent needs to access before you turn it on. Map the workflows it will touch and decide which tasks are suitable for full autonomy. Anything else should stay under the control of the relevant human teams. Broad permissions make governance close to impossible, so stick to least-privilege access from the start.
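
An access map can be written down as data before anything is enabled, which makes least privilege reviewable. Here is a hedged Python sketch with hypothetical workflow and system names. Each workflow lists the exact systems it touches and an autonomy level. `review` flags wildcard grants, and `autonomy_for` defaults any unmapped workflow to human-only.

```python
from dataclasses import dataclass
from enum import Enum


class Autonomy(Enum):
    FULL = "agent acts alone"
    HUMAN_APPROVAL = "agent proposes, a person approves"
    HUMAN_ONLY = "agent has no access"


@dataclass(frozen=True)
class WorkflowAccess:
    workflow: str
    systems: frozenset[str]   # the exact resources this workflow touches
    autonomy: Autonomy


# Hypothetical access map drawn up before the agent is switched on.
ACCESS_MAP = [
    WorkflowAccess("meeting scheduling", frozenset({"calendar"}), Autonomy.FULL),
    WorkflowAccess("supplier payments", frozenset({"erp.payables"}), Autonomy.HUMAN_APPROVAL),
]


def review(access_map: list[WorkflowAccess]) -> list[str]:
    """Flag entries that break least privilege before anything is enabled."""
    problems = []
    for entry in access_map:
        for system in sorted(entry.systems):
            if "*" in system:
                problems.append(f"{entry.workflow}: wildcard access to {system}")
    return problems


def autonomy_for(workflow: str, access_map: list[WorkflowAccess]) -> Autonomy:
    """Anything not explicitly mapped stays with the relevant human team."""
    for entry in access_map:
        if entry.workflow == workflow:
            return entry.autonomy
    return Autonomy.HUMAN_ONLY


print(review(ACCESS_MAP))                           # [] means no wildcard grants were found
print(autonomy_for("contract signing", ACCESS_MAP))
```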

Step 2 – Establish oversight and monitoring mechanisms

You need to be able to see every action an agent takes. Set up logging and activity tracking that gives you a clear line of sight into everything the agent is doing. Make sure there’s also a way to step in and take control if something doesn’t look right. For high-risk actions such as purchases, data transfers, or system changes, add manual approval steps to the workflow so that a person always signs off.
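
This is a minimal sketch of the oversight pattern in Step 2. It assumes a hypothetical `approver` callback standing in for whatever sign-off process an organisation uses, such as a ticket, a chat approval, or a manager’s review. Every action is logged, high-risk kinds need explicit approval, and `halt` acts as a kill switch. The action names and the 500 spending limit in the usage lines are illustrative only.

```python
import logging
from dataclasses import dataclass
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent-oversight")

# Action kinds treated as high risk, following Step 2; names are illustrative.
HIGH_RISK = {"purchase", "data_transfer", "system_change"}


@dataclass
class Action:
    kind: str
    detail: str
    amount: float = 0.0


class OversightGate:
    """Logs every action, requires human sign-off for high-risk ones,
    and can be halted by an operator at any time."""

    def __init__(self, approver: Callable[[Action], bool]) -> None:
        self.approver = approver   # hypothetical hook into a real approval process
        self.halted = False

    def halt(self, reason: str) -> None:
        self.halted = True
        log.warning("agent halted: %s", reason)

    def submit(self, action: Action) -> bool:
        if self.halted:
            log.info("rejected (agent halted): %s", action)
            return False
        if action.kind in HIGH_RISK and not self.approver(action):
            log.info("rejected by approver: %s", action)
            return False
        log.info("executed: %s", action)
        return True


# Usage: a stand-in approver that signs off on any high-risk action of 500 or less.
gate = OversightGate(approver=lambda a: a.amount <= 500)
gate.submit(Action("read_calendar", "next week"))             # low risk: runs
gate.submit(Action("purchase", "conference tickets", 1200))   # over the limit: refused
gate.halt("unexpected outbound traffic")
gate.submit(Action("read_calendar", "next week"))             # refused after halt
```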

Step 3 – Align with regulatory and policy standards

Any rollout needs to fit with internal policies and the external rules you operate under. Bring compliance teams in early and make sure requirements from GDPR, the EU AI Act, and any industry-specific standards are built into the plan. Getting this right from the start makes it much easier to show auditors you’re complying with relevant regulations, and it helps you avoid costly fixes later.

Step 4 – Build organisational AI literacy

One of the biggest risks with autonomous agents is low user literacy. Teams often grant access without fully understanding the implications. Training programmes and clear internal communications can help staff recognise when and how to safely use agentic features. Boosting AI skills across the organisation reduces the chance of uninformed permission grants and strengthens overall governance.

Phased Adoption Strategy for Autonomous Agents

Rolling out autonomous agents isn’t something enterprises can do overnight. A phased AI deployment strategy helps control risk while proving value and building confidence across the organisation.

Pilot programmes and controlled environments

Start small. Begin with pilot programmes in sandbox environments where agents can be tested without going near live systems or sensitive data. Track the results closely, not just for efficiency gains but for any sign of unexpected behaviour. Use what you learn to tighten access levels, improve monitoring, and set up clear escalation paths before you even think about a wider rollout.
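
During a pilot, a simple guard can keep the agent away from live systems while also collecting evidence of unexpected behaviour. In this sketch the sandbox hostnames and expected action names are assumptions. Calls to any non-sandbox host are refused. Actions outside the expected set are allowed in the sandbox but recorded for the pilot review.

```python
from urllib.parse import urlparse

# Hypothetical sandbox hosts: pilot agents may only reach these copies of systems.
SANDBOX_HOSTS = {"crm.sandbox.example.internal", "erp.sandbox.example.internal"}


class PilotMonitor:
    def __init__(self, expected_actions: set[str]) -> None:
        self.expected = expected_actions
        self.anomalies: list[str] = []   # evidence for the end-of-pilot review

    def check(self, action: str, url: str) -> bool:
        """Return True if the call may proceed; record anything unusual."""
        host = urlparse(url).hostname or ""
        if host not in SANDBOX_HOSTS:
            self.anomalies.append(f"blocked call to non-sandbox host {host!r}")
            return False
        if action not in self.expected:
            # Allowed inside the sandbox, but flagged before any wider rollout.
            self.anomalies.append(f"unexpected action {action!r}")
        return True


monitor = PilotMonitor(expected_actions={"lookup_customer", "draft_invoice"})
monitor.check("lookup_customer", "https://crm.sandbox.example.internal/api/customers/42")
monitor.check("delete_customer", "https://crm.sandbox.example.internal/api/customers/42")
monitor.check("lookup_customer", "https://crm.example.com/api/customers/42")
for note in monitor.anomalies:
    print(note)
```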

Scaling safely with governance in place

Once pilots show consistent, reliable results, begin expanding access gradually. Every stage of this rollout should have governance enforcement baked in, with monitoring and human oversight scaled alongside agent permissions. Keep high-risk processes on manual approval until the organisation can show agents are operating safely over time. This risk-managed adoption approach lets enterprises scale innovation without losing control of security or compliance.
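
The promotion decision can also be made explicit. The sketch below assumes four hypothetical phases and made-up thresholds: 30 days, 500 actions, and at most 0.1 per cent unexpected actions. An agent moves up at most one phase per review, stays put when evidence is thin, and drops back to the sandbox after any incident. High-risk actions keep manual approval until the final phase and an explicit sign-off.

```python
from dataclasses import dataclass

PHASES = ["sandbox", "read_only_production", "limited_write", "broad_write"]
HIGH_RISK = {"purchase", "data_transfer", "system_change"}

# Hypothetical thresholds; each organisation would set its own.
MIN_DAYS = 30
MIN_ACTIONS = 500
MAX_UNEXPECTED_RATE = 0.001


@dataclass
class PhaseReport:
    phase: str
    days_in_phase: int
    actions: int
    unexpected_actions: int
    incidents: int


def next_phase(report: PhaseReport) -> str:
    """Promote one step at most, hold when evidence is thin or behaviour is off,
    and fall back to the sandbox after any incident."""
    if report.incidents > 0:
        return "sandbox"
    enough_evidence = report.days_in_phase >= MIN_DAYS and report.actions >= MIN_ACTIONS
    rate = report.unexpected_actions / report.actions if report.actions else 1.0
    if not enough_evidence or rate > MAX_UNEXPECTED_RATE:
        return report.phase
    i = PHASES.index(report.phase)
    return PHASES[min(i + 1, len(PHASES) - 1)]


def needs_manual_approval(action_kind: str, phase: str, signed_off: bool = False) -> bool:
    """High-risk actions stay manual until the final phase and an explicit sign-off."""
    if action_kind not in HIGH_RISK:
        return False
    return not (phase == PHASES[-1] and signed_off)


print(next_phase(PhaseReport("sandbox", 45, 1200, 0, 0)))
print(needs_manual_approval("purchase", "limited_write"))
```

Keeping the rule in code (or in an equivalent written policy) means each expansion of access has a recorded justification that compliance teams can review later.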

Final Thoughts: Governance Is the Gateway to Enterprise AI Agents

Autonomous agents could become one of the most transformative shifts in enterprise AI. They offer a way to automate complex processes, unlock new efficiencies, and change how decisions get made across entire organisations.

But there’s no safe shortcut to that future. Without strong governance, permissions, and oversight, the same capabilities that make agentic AI powerful can just as easily create serious security, compliance, and operational risks.

Enterprises that build these foundations early will be ready to scale agentic AI with confidence. Those that don’t will struggle to move past limited pilots. If you’re looking to stay ahead of this curve and make better decisions about secure AI adoption, you’ll find more practical guidance and expert insight here at EM360Tech.

Ridhima Nayyar

05/08/2025