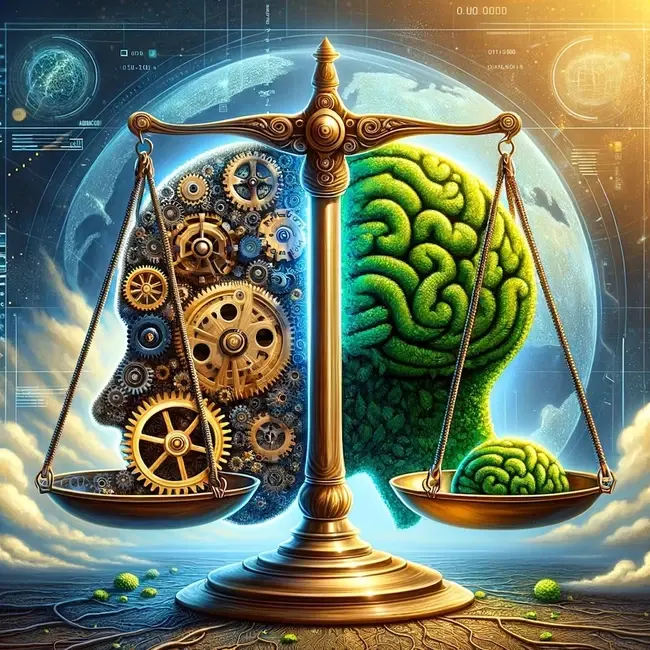

As artificial intelligence (AI) continues to advance at an astonishing pace, businesses increasingly integrate AI technologies into their strategies to drive innovation, optimize processes, and gain a competitive advantage. However, as AI becomes more sophisticated and ubiquitous, it is crucial for organizations to consider the ethical implications of their AI-driven decisions and actions. Balancing the pursuit of innovation with the responsibility to develop and deploy AI systems in an ethical manner is a complex challenge that requires careful consideration and proactive measures.

The development and implementation of AI technologies raise a multitude of ethical concerns, ranging from issues of privacy, bias, and transparency to questions of accountability, fairness, and the potential impact on society as a whole. As AI systems become more autonomous and influential in decision-making processes, it is essential for businesses to establish clear ethical guidelines and frameworks to ensure that their AI initiatives align with societal values and promote the well-being of all stakeholders.

Integrating ethical considerations into business decisions that involve AI is essential to a comprehensive AI strategy. AI adoption brings a number of ethical challenges, but organizations that understand the potential risks and benefits can navigate them. By proactively addressing ethical concerns and embedding responsible practices into their AI strategies, businesses can foster trust, mitigate risks, and create sustainable value for both their organizations and society. Here are some key points to remember:

Ethical Considerations:

Bias and Discrimination: AI systems can perpetuate societal biases if trained on data reflecting those biases. This can lead to discriminatory practices in hiring, loan approvals, and more. Businesses need diverse, representative datasets as well as algorithms that can detect and mitigate bias.

Transparency and Accountability: Many AI systems are "black boxes," making it difficult to understand their decision-making process. This lack of transparency can raise concerns about fairness and accountability. Businesses should strive for explainable AI that allows human oversight and intervention.

Privacy and Data Security: AI generally relies on massive datasets, which raises privacy concerns. Businesses must ensure user data is collected, stored, and used responsibly, complying with data privacy regulations and mitigating reputational risks.

Implementing Responsible AI:

Data Governance: Establish clear policies for data collection, use, and storage. Prioritize data anonymization and user consent.

Human in the Loop: AI should complement human decision-making, not replace it. Human-in-the-loop policies involve people setting parameters, monitoring outcomes, and intervening when necessary.

Algorithmic Auditing: Regularly assess AI systems for bias and fairness. Use diverse teams to identify potential issues and develop mitigation strategies.
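As one illustration of what a recurring bias audit might compute, the sketch below compares the rate of favorable decisions across groups. The group labels, the sample data, and the review threshold are all hypothetical. The 0.8 value echoes the "four-fifths" rule of thumb from US employment guidance, but here it is only an assumed policy setting, and a real audit would look at more than one metric.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable decisions for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, favorable decision?)
sample = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

REVIEW_THRESHOLD = 0.8  # assumed policy value, to be set by whoever owns the audit

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own. It is a signal that the diverse review team described above should investigate the system and its training data.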

Impact on Privacy and Data Security:

Data Collection: Businesses should be transparent about the data they collect and how it's used for AI development. Users should have control over their data and be able to opt out.

Data Security: Robust cybersecurity measures are essential to protect sensitive data from breaches and misuse.

Algorithmic Bias: Biased algorithms can lead to discriminatory practices such as unfair pricing or discriminatory ad targeting. Businesses need to be vigilant about identifying and mitigating such biases.
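The consent and data-minimization points above can be made concrete with a small sketch. It assumes a hypothetical record layout (`user_id`, `email`, `purchase_total`, `consent_ai_training`) and a placeholder secret key. It drops records from users who have not opted in, removes direct identifiers, and replaces the user ID with a keyed hash. Note that this is pseudonymization rather than full anonymization: anyone holding the key can still link records to a user.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 hash (HMAC) of that ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_records(records, secret_key):
    """Keep only consenting users and strip direct identifiers."""
    prepared = []
    for record in records:
        # Treat a missing consent flag as an opt-out.
        if not record.get("consent_ai_training", False):
            continue
        prepared.append({
            "user_ref": pseudonymize(record["user_id"], secret_key),
            "purchase_total": record["purchase_total"],
            # The email address is deliberately not copied over.
        })
    return prepared


# Hypothetical records; the field names are assumptions made for this sketch.
records = [
    {"user_id": "u1", "email": "a@example.com", "purchase_total": 120.0,
     "consent_ai_training": True},
    {"user_id": "u2", "email": "b@example.com", "purchase_total": 80.0,
     "consent_ai_training": False},
    {"user_id": "u3", "email": "c@example.com", "purchase_total": 45.5},
]

# Placeholder only: in practice the key would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

training_rows = prepare_training_records(records, SECRET_KEY)
print(len(training_rows))
```

Defaulting to exclusion when the consent flag is absent is a design choice in this sketch. It errs on the side of the user's control over their data.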

Maintaining Ethical Standards:

Develop an AI Ethics Framework: Create a set of principles guiding AI development and use within your business. This framework should consider fairness, transparency, privacy, and accountability. (See the AI Ethics Framework section below for more details on building one.)

Foster a Culture of Ethics: Educate employees about ethical considerations in AI and encourage responsible data practices.

Stay Informed: Keep up to date on evolving regulations and best practices for ethical AI use.

AI Ethics Framework

A comprehensive AI ethics framework for businesses should include the following key components:

Guiding Principles: Establish a set of core ethical principles that guide the development, deployment, and use of AI systems within the organization. These principles may include fairness, transparency, accountability, privacy protection, and respect for human rights.

Governance Structure: Define a clear governance structure that outlines roles, responsibilities, and decision-making processes related to AI ethics. This should involve the creation of an AI ethics committee or board, which includes diverse stakeholders from various departments and backgrounds.

Risk Assessment: Implement a robust risk assessment process to identify and evaluate potential ethical risks associated with AI systems. This should include assessing the impact on individuals, society, and the environment, as well as considering unintended consequences and long-term implications.

Data Management: Establish guidelines for the ethical collection, storage, and use of data in AI systems. This should cover aspects such as data privacy, security, consent, and bias mitigation in datasets.

Algorithmic Fairness: Ensure that AI algorithms are designed and tested to minimize bias and discrimination. Regularly audit and monitor AI systems for fairness and take corrective actions when necessary.

Transparency and Explainability: Promote transparency by providing clear explanations of how AI systems make decisions and predictions. This helps build trust with stakeholders and enables accountability.

Human Oversight: Maintain human oversight and control over AI systems, especially in high-stakes decisions. Ensure that there are mechanisms in place for human intervention and the ability to override AI-based decisions when necessary.

Continuous Monitoring and Improvement: Regularly monitor and assess the performance and impact of AI systems to identify and address any emerging ethical issues. Foster a culture of continuous learning and improvement in AI ethics practices.

Stakeholder Engagement: Engage with internal and external stakeholders, including employees, customers, regulators, and the wider public, to gather feedback, address concerns, and ensure alignment with societal values and expectations.

Training and Awareness: Provide training and awareness programs to employees involved in the development, deployment, and use of AI systems. This helps foster a shared understanding of AI ethics principles and practices across the organization.

Accountability and Remediation: Establish clear accountability measures and processes for addressing and remedying any ethical breaches or adverse impacts caused by AI systems.
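The Human Oversight component above is often implemented as a routing rule that decides when a person must review a model's output. The sketch below is one minimal way to express that idea. The `Decision` type, the function names, and the 0.90 confidence threshold are all assumptions made for illustration, not a prescribed design.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str       # e.g. "approve", "deny", or "needs_human_review"
    decided_by: str    # "model", "human", or "pending"
    confidence: float  # model confidence between 0.0 and 1.0


CONFIDENCE_THRESHOLD = 0.90  # assumed value; a governance body would set this


def route_decision(model_outcome: str, confidence: float, high_stakes: bool) -> Decision:
    """Send high-stakes or low-confidence cases to a human reviewer."""
    if high_stakes or confidence < CONFIDENCE_THRESHOLD:
        return Decision("needs_human_review", "pending", confidence)
    return Decision(model_outcome, "model", confidence)


def apply_human_override(decision: Decision, reviewer_outcome: str) -> Decision:
    """Record the reviewer's outcome, which replaces the model's suggestion."""
    return Decision(reviewer_outcome, "human", decision.confidence)


# Routine, high-confidence case: the model's outcome stands.
routine = route_decision("approve", 0.97, high_stakes=False)

# High-stakes case: always reviewed, regardless of confidence.
flagged = route_decision("deny", 0.97, high_stakes=True)
final = apply_human_override(flagged, "approve")

print(routine.decided_by, flagged.outcome, final.decided_by)
```

Recording who made each decision also supports the accountability measures described above, because every outcome can be traced to either the model or a named review step.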

By incorporating these components into an AI ethics framework, businesses can proactively address ethical challenges, build trust with stakeholders, and ensure the responsible development and use of AI technologies.