Have you ever wondered how machines learn to play games or navigate dynamic environments?

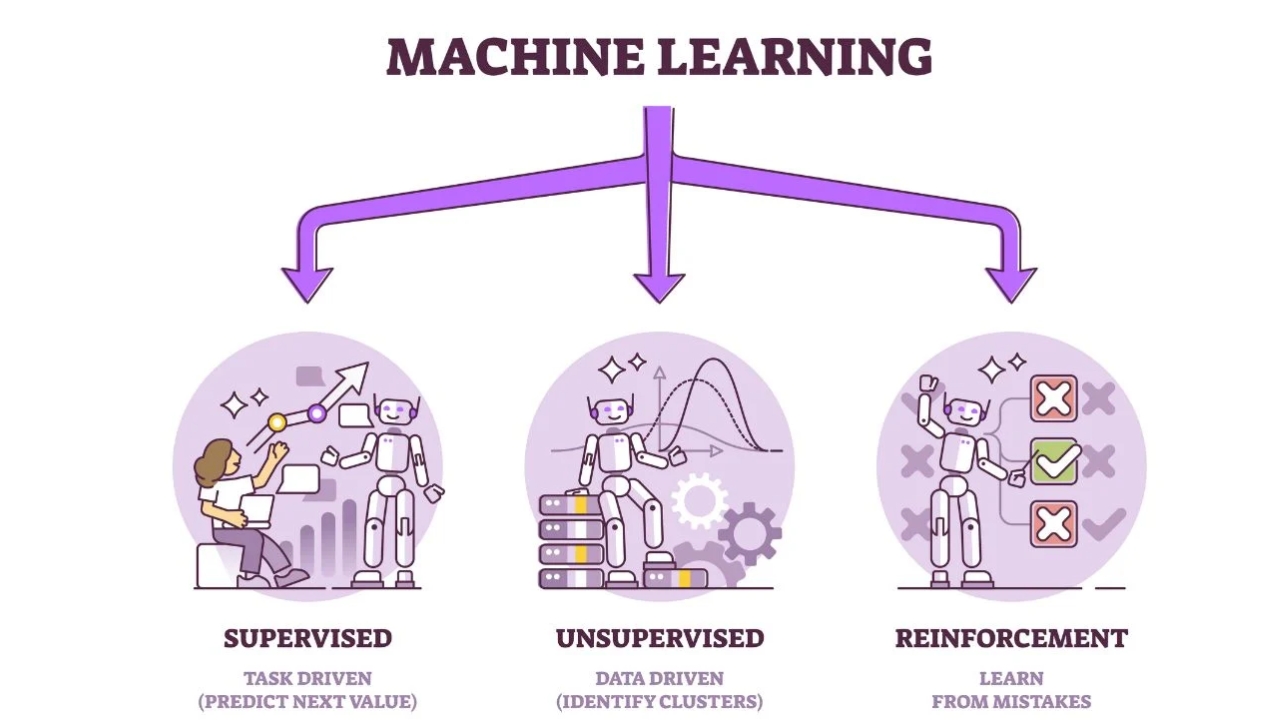

Q-learning helps agents make optimal decisions by estimating the long-term value of their action. It's a powerful tool in Reinforcement Learning (RL), a technique where agents learn through trial and error.

In this article, we explore how Q-learning works, its connection to Reinforcement Learning, Q-Value, The Bellman Equation, and how Q-learning is being used in real-world applications.

What is Q-Learning?

Q-learning is a type of reinforcement learning algorithm where the agent isn't explicitly told what the right things to do are, but instead learns by receiving rewards for good actions and penalties for bad actions.

It’s a type of machine learning where an agent learns through trial and error in an environment, without explicit instructions.

The agent doesn't need a complete model of the environment to learn. It learns by directly interacting with the environment and receiving rewards for its actions.

Q-learning is an algorithm for learning an action-value function. This function tells the agent how good it is to take a specific action in a particular state, considering the expected future rewards. Q-learning is used within the field of reinforcement learning (RL).

What is the role of Q-learnign in Reinforcement Learning (RL)?

Q-learning is a fundamental algorithm in the field of reinforcement learning, allowing an agent to learn the best course of action to take in a given situation (state) in order to maximize future rewards.

The goal of Reinforcement learning is for the agent to learn an optimal policy. This policy essentially dictates the best course of action for the agent to take in any situation within the environment to maximize its total reward over time.

Read: What is Reinforcement Learning?

Q-learning is a powerful tool within the realm of RL, helping agents navigate complex environments and make optimal decisions based on the information they gather.

Unlike some other RL methods, Q-learning doesn't require a pre-defined model of the environment. It learns through trial and error, interacting with the environment and receiving rewards (or penalties) for its actions.

By continuously updating a Q-value table that stores these action-state values, the agent learns which actions lead to the most desirable outcomes (highest rewards) in the long run. This enables it to make increasingly optimal decisions as it interacts more with the environment.

What is a Q-Value?

A Q-value represents the expected future reward an agent can get by taking a specific action in a particular state.

It's not just about the immediate reward from that action, but also the potential rewards the agent might gain in the future based on the choices it makes after taking that initial action.

A key challenge in Q-learning is striking a balance between exploration and exploitation:

- Exploration: The agent needs to try different actions in different states to learn their true Q-values. This helps it discover potentially better options.

- Exploitation: Once the agent has some experience, it should ideally choose the actions with the highest Q-values (exploitation), which are likely to lead to the most reward in the long run.

While powerful, Q-learning has some limitations:

- Curse of dimensionality: With very large or complex state spaces, the number of Q-values to learn can become enormous. This can make it impractical or slow for the agent to learn effectively.

- Non-stationarity: If the environment changes over time (rewards or actions available in certain states change), the learned Q-values might become outdated.

Researchers have developed advanced techniques to address these limitations:

- Deep Q-learning: This combines Q-learning with neural networks, allowing the agent to learn Q-values for complex state spaces more efficiently.

- Prioritized experience replay: This technique prioritizes updating Q-values for surprising or important experiences, making the learning process more efficient.

What is the Bellman equation?

The Bellman equation is a fundamental formula in reinforcement learning that helps an agent estimate the long-term value of taking a specific action in a particular state, considering both the immediate reward and the discounted value of the best future state achievable from that action.

The Bellman equation helps the agent figure out the best action to take by considering two things:

- Immediate Reward (R): This is the reward the agent gets right after taking an action.

- Expected Future Reward: This isn't just about the next reward, but the total reward the agent expects to get in the long run after taking that action and making future choices.

Examples of Q-Learning

Q-learning has been instrumental in training AI agents to play games at a superhuman level. AlphaGo, the program that defeated the world champion in Go, used a deep Q-learning variant to achieve mastery. Similarly, Q-learning has been used to train agents to play Atari games with impressive results.

Q-learning can be used to train robots to perform tasks in complex environments. For instance, robots can learn to navigate obstacles, manipulate objects, or walk on uneven terrain through trial and error using Q-learning algorithms.

In tasks like network traffic control or server load balancing, Q-learning can help optimize resource allocation. The algorithm can learn to distribute resources (bandwidth, processing power) based on real-time data and achieve better performance.

Recommendation systems can leverage Q-learning to personalize user experiences. By learning from user interactions and feedback (rewards), the system can recommend items (movies, products) that users are more likely to engage with in the future.

Q-learning algorithms are currently being explored for developing trading strategies in financial markets. The agent can learn to make investment decisions by analyzing historical data and market conditions, aiming to maximize returns.

Q-learning offers a powerful approach for tasks where an agent needs to learn optimal strategies through trial and error in dynamic environments. As research continues to advance, we can expect even more innovative applications of Q-learning in the future.