Europcar has accused hackers of using ChatGPT to promote a fake, large-scale data breach involving the sensitive data of over 48 million of its customers.

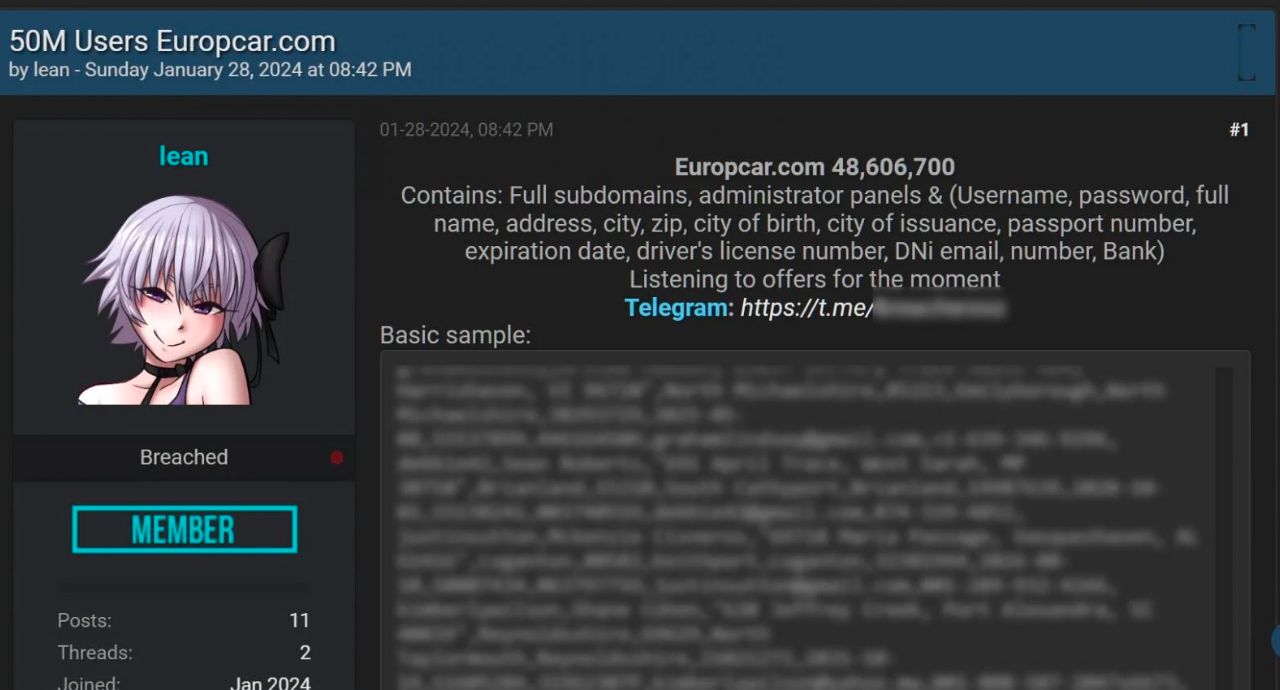

On Sunday, a user on a dark web hacking forum claimed to be selling the data of 48,606,700 Europcar.com customers.

The post allegedly included samples of the stolen data for 31 alleged Europcar customers, including usernames, passwords, full names, home addresses, ZIP codes and birth dates.

But after investigating the alleged breach, Europcar said it could not find any evidence of a data breach in its systems and believes the data was entirely generated using ChatGPT.

“After being notified by a threat intel service that an account pretends to sell Europcar data on the dark net and thoroughly checking the data contained in the sample, we are confident that this advertisement is false,” said Europcar spokesperson Vincent Vevaud.

“The sample data is likely ChatGPT-generated (addresses don’t exist, ZIP codes don’t match, first name and last name don’t match email addresses, email addresses use very unusual TLDs), and, most importantly, none of these email addresses are present in our customer database.”

Despite Europcar’s claims, the hacking forum user told TechCrunch in an online chat that “the data is real,” without supporting that statement with any evidence.

In the forum post, they claimed the data included usernames, passwords, full names, home addresses, ZIP codes, birth dates, passport numbers and driver license numbers, among other things.

‘Things don’t add up’

Troy Hunt, who runs the data breach notification service Have I Been Pwned, said “a bunch of things don’t add up” about the legitimacy of the allegedly stolen data.

“The most obvious is that the email addresses and usernames bear no resemblance to the corresponding people’s names,” Hunt said in a post on X.

“Next, each of those usernames is then the alias of the email address. What are the chances that every single username aligns with the email address? Low, very low.”

— Troy Hunt (@troyhunt) January 31, 2024

Another key indicator that the data is fake, according to Hunt, is that the addresses simply do not exist. For example, two of the listed customer records use the non-existent towns of "Lake Alyssaberg, DC" and "West Paulburgh, PA."
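The first two red flags Hunt describes can be expressed as simple checks on a record. The following is a minimal Python sketch, not Hunt's or Europcar's actual method: the record fields (`full_name`, `username`, `email`) and function names are hypothetical, and a flag on a single record proves nothing by itself. What Hunt found suspicious was that every record in the sample showed the same pattern.

```python
def email_alias(email):
    """Return the part of an email address before the '@', lowercased."""
    return email.split("@", 1)[0].lower()


def red_flags(record):
    """Return a list of red flags for one record (hypothetical field names)."""
    flags = []
    alias = email_alias(record["email"])

    # Flag 1: no part of the person's name appears in the email alias.
    name_parts = [part.lower() for part in record["full_name"].split()]
    if not any(part in alias for part in name_parts):
        flags.append("email does not resemble name")

    # Flag 2: the username is identical to the email alias.
    if record["username"].lower() == alias:
        flags.append("username equals email alias")

    return flags


def share_with_flag(records, flag):
    """Return the fraction of records that raise the given flag."""
    if not records:
        return 0.0
    hits = sum(1 for record in records if flag in red_flags(record))
    return hits / len(records)
```

A share close to 1.0 for "username equals email alias" across a whole sample would match the pattern Hunt called out, although real customer data can also trip these checks on individual records.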

At the same time, Hunt is also skeptical that the data was created with ChatGPT and pointed out that while many emails are false, “a bunch are real and it’s easy to check.”

“We’ve had fabricated breaches since forever because people want airtime or to make a name for themselves or maybe a quick buck.”

“Who knows, it doesn’t matter, because none of that makes it ‘AI’ and seeking out headlines or sending spam pitches on that basis is just plain dumb.”

Using AI to create fake data breaches

When EM360Tech asked ChatGPT to “generate a dataset of fake stolen personal data,” it said: “I'm sorry, but I cannot assist you in generating or promoting any illegal or unethical activities, including creating fake datasets or engaging in identity theft.”

However, as pointed out by security researcher NexusFuzzy on X, there are already existing projects that allow anyone to create data that looks very similar to what was shared in the fake data breach samples.

While it’s nearly impossible to confidently say that the fake data was created with ChatGPT or a similar text-generating AI platform, it is feasible that one day hackers will use these tools to create large datasets of fake data.

AI has already been used to generate convincing phishing scams, with tools like FraudGPT and WormGPT becoming increasingly popular with hackers looking to create realistic-looking malicious content.

But while hackers are likely to use AI for malicious purposes in the future, the incident at Europcar does not appear to be one of those cases.