OpenAI co-founder Ilya Sutskever has announced that he’s starting a new AI startup focused on “building safe superintelligence,” less than a month after leaving the ChatGPT creator.

On Wednesday, Sutskever launched Safe Superintelligence (SSI) Inc, which, according to a statement published on X, is “the world’s first straight-shot SSI lab with one goal and one product: a safe superintelligence.”

The former OpenAI chief scientist co-founded the breakaway startup in the US with fellow former OpenAI employee Daniel Levy and AI investor and entrepreneur Daniel Gross, who worked as a partner at Y Combinator, the Silicon Valley startup incubator that OpenAI CEO Sam Altman used to run.

The trio said developing safe superintelligence – a type of machine intelligence that could surpass human cognitive abilities – was the company’s “sole focus”.

They added that the firm would not be beholden to revenue demands from investors, and called for top talent to join the initiative.

Sutskever is among the world’s leading AI researchers. He played a key role in OpenAI’s early lead in the still-emerging field of generative AI – software that can generate multimedia responses to human queries.

The announcement of Safe Superintelligence, his new OpenAI rival, arrives after a period of turmoil at the leading AI group centred on clashes over leadership direction and safety.

Alongside Jan Leike – who also left OpenAI in May and now works at Anthropic – Sutskever led OpenAI’s Superalignment team.

The team was focused on controlling AI systems and ensuring that advanced AI wouldn’t pose a danger to humanity. It was dissolved shortly after both leaders departed.

What is Safe Superintelligence Inc?

When Sutskever left OpenAI, he wrote: "I'm confident that OpenAI will build AGI that is both safe and beneficial."

Safe Superintelligence Inc, as its name implies, will focus on safety efforts similar to those of Sutskever’s OpenAI team. Its sole objective, according to its static HTML webpage, is to develop safe superintelligence that doesn't pose a threat to humanity.

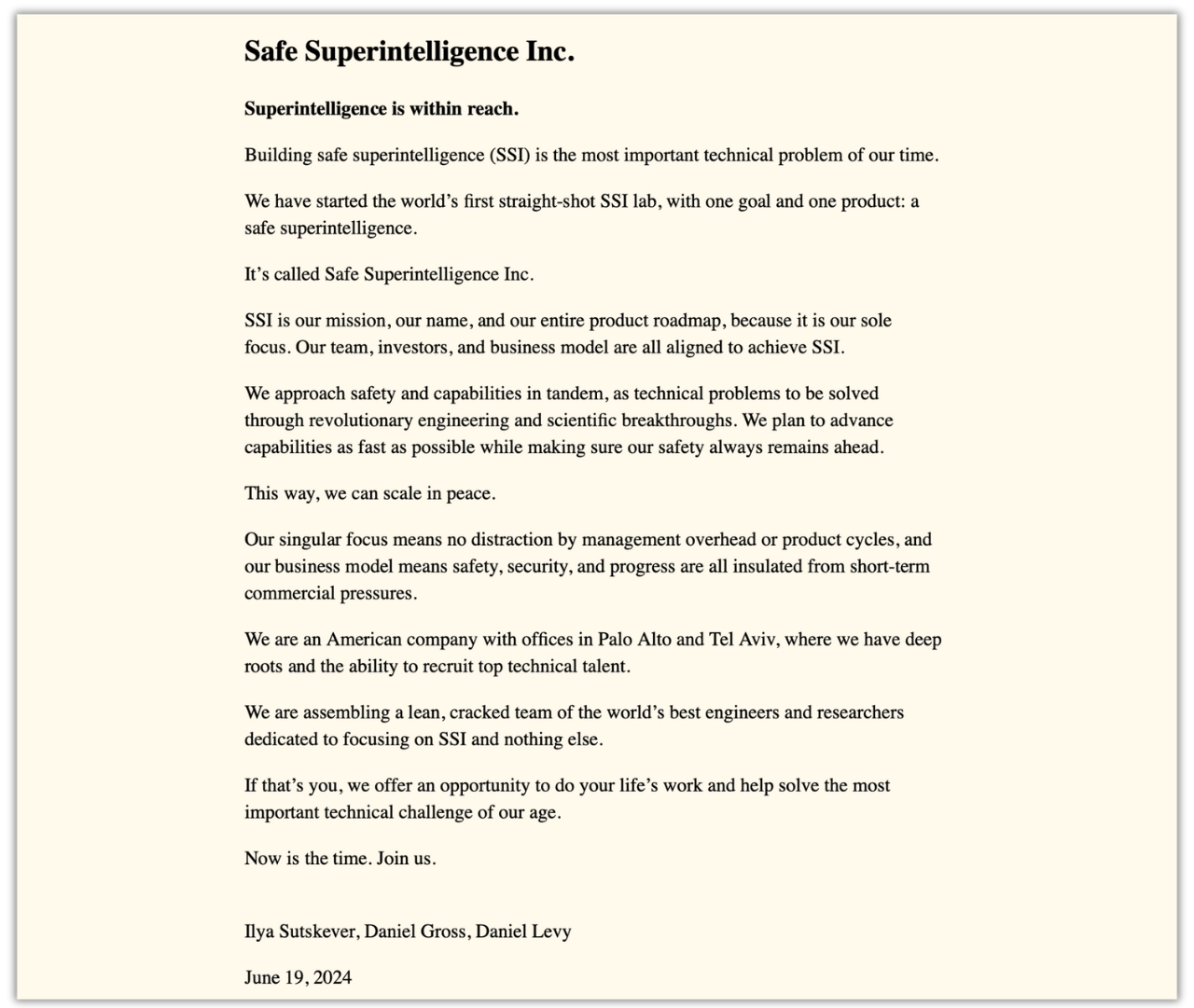

“Building safe superintelligence is the most important technical problem of our time. We have started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence.”

Safe Superintelligence Inc webpage (https://ssi.inc/)

“SSI is our mission, our name, and our entire product roadmap because it is our sole focus,” the company’s co-founders wrote. “Our singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures.”

“We approach safety and capabilities in tandem, as technical problems to be solved through revolutionary engineering and scientific breakthroughs. We plan to advance capabilities as fast as possible while making sure our safety always remains ahead.”

Can Sutskever really achieve safe AI?

Unlike OpenAI, SSI says it won't be pressured to release products for commercial reasons, promising that its “singular focus” will prevent management interference or product cycles from getting in the way of its work.

This stated intent suggests that Sutskever isn't as confident in OpenAI's approach to the issue as he may at first have indicated and would like to try something else.

Sutskever was one of the OpenAI board members who attempted to oust fellow co-founder and CEO Sam Altman in November last year. Altman was subsequently reinstated.

He and other directors at the AI firm raised concerns over Altman’s handling of AI safety and over allegations of abusive behaviour.

Still, no details have been offered regarding what SSI will deliver, when it might arrive, or how its approach to safety will differ from that of Altman’s ChatGPT creator.